Advanced Technologies & Embedded

Natural language processing is a messy and complicated affair, but modern techniques are producing increasingly impressive results. Word embeddings, a machine learning technique for representing words as vectors, have taken the natural language processing world by storm. Performance on many NLP tasks has improved when word embeddings are used in some form.

In this article, we’ll look at how word embeddings are computed, how they manage to capture at least part of a language’s semantics, and how modern techniques use them to discover useful information across documents.

Word semantics

The so-called bag-of-words representation of text is a classical NLP method that represents documents as simple collections of words, ignoring word order, sentence structure, and any other semantic information. Each word is represented by a number, usually a normalized count of how often it appears in a piece of text, and each document by a vector of such numbers. Variations of this method have been used for decades and give useful results despite their oversimplification of language; many databases, for example, rely on this kind of representation in their full-text search implementations. By design, however, these models cannot capture word semantics.
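A minimal sketch of the bag-of-words representation, assuming a naive lowercase-and-split tokenizer (real systems also strip punctuation and often drop stop words). The function name `bag_of_words` is illustrative:

```python
from collections import Counter


def bag_of_words(text: str) -> dict[str, float]:
    """Map each word to its normalized frequency, ignoring word order."""
    words = text.lower().split()  # naive whitespace tokenizer (assumption)
    counts = Counter(words)
    total = sum(counts.values())
    return {word: count / total for word, count in counts.items()}


doc_a = bag_of_words("the dog bit the man")
doc_b = bag_of_words("the man bit the dog")
print(doc_a == doc_b)  # True: the two sentences get identical representations
print(doc_a["the"])    # 0.4
```

The two sentences mean very different things, yet their representations are identical, which is exactly the loss of information described above.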

Word embeddings take a different approach: each word is represented by a vector (its embedding) rather than a single number. The extra dimensions give the representation room to capture multiple facets of a word’s meaning. The question, of course, is how to choose these vectors so that they actually capture word semantics in a useful way.
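The difference shows up as soon as we compare words. If each word gets its own dimension, as in a one-hot encoding, any two different words have cosine similarity 0 regardless of meaning, whereas dense vectors can place related words close together. The embedding values below are made up for illustration, not learned:

```python
import numpy as np


def cosine(u, v):
    """Cosine similarity: 1 for the same direction, 0 for orthogonal vectors."""
    return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))


vocab = ["king", "queen", "apple"]
# One-hot encoding: each word gets its own dimension.
one_hot = {w: np.eye(len(vocab))[i] for i, w in enumerate(vocab)}
# Hypothetical dense embeddings with made-up values (not learned).
dense = {
    "king": np.array([0.9, 0.8, 0.1]),
    "queen": np.array([0.9, 0.7, 0.2]),
    "apple": np.array([0.1, 0.0, 0.9]),
}
print(cosine(one_hot["king"], one_hot["queen"]))  # 0.0
print(cosine(one_hot["king"], one_hot["apple"]))  # 0.0
print(cosine(dense["king"], dense["queen"]) > cosine(dense["king"], dense["apple"]))  # True
```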

Word vectors

In 2013, Google introduced word2vec, a model capable of learning word vectors very efficiently from a huge number of training samples. Along with the algorithm and source code, they released a set of pre-trained word vectors learned from a Google News corpus of roughly 100 billion words. These vectors famously captured analogies such as “a king is to a man as a queen is to a woman”. To better understand how the model works, we’ll take a closer look at a simplified version of it that produces similar results.

To learn word vectors, the model uses a shallow neural network. It takes one word as input and tries to predict another word as output. During training, many such word pairs (training samples) are fed to the network so that, over many iterations, it learns how to map words to one another.
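Generating the training pairs is straightforward. The sketch below assumes the skip-gram setup, in which every word within a fixed window around an input word becomes one of its prediction targets. The window size of 3 and the name `training_pairs` are illustrative choices, and the real word2vec implementation adds refinements (such as subsampling frequent words) that are omitted here:

```python
def training_pairs(tokens, window=3):
    """Pair each word with every word at most `window` positions away (skip-gram style)."""
    pairs = []
    for i, word in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:  # a word is not its own context
                pairs.append((word, tokens[j]))
    return pairs


tokens = "the king built a castle".split()
pairs = training_pairs(tokens)
print(("king", "castle") in pairs)  # True: "castle" is 3 positions after "king"
print(len(pairs))                   # 18
```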

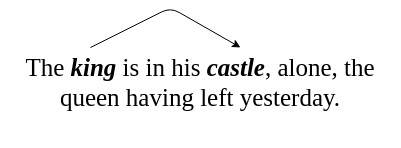

Given a piece of text, the model selects pairs of words that appear near one another. Take the following example:

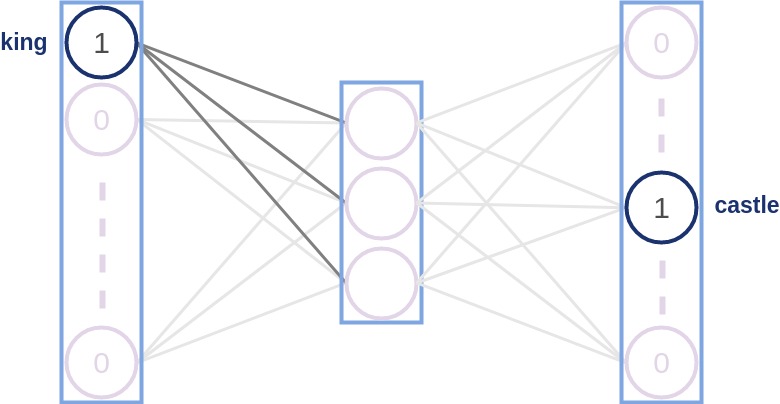

In this case, “king” is a context word for “castle”. A training pair then becomes king-castle i.e. given word “king” try to predict the word “castle”. The neural network behind this model tries to best learn this mapping. Continuing with this example, the model’s network initially looks like this:

Note the connections between the first and second layers. Each of those lines has a number associated with it (normally called a “weight”). Those numbers taken together form a vector. This is the “king” word vector we want to learn. This vector is randomly initialized and doesn’t offer much information at first. As training progress and the network updates its weights to get better and better at producing this mapping, the weights change and in the end, the network might look something like this:

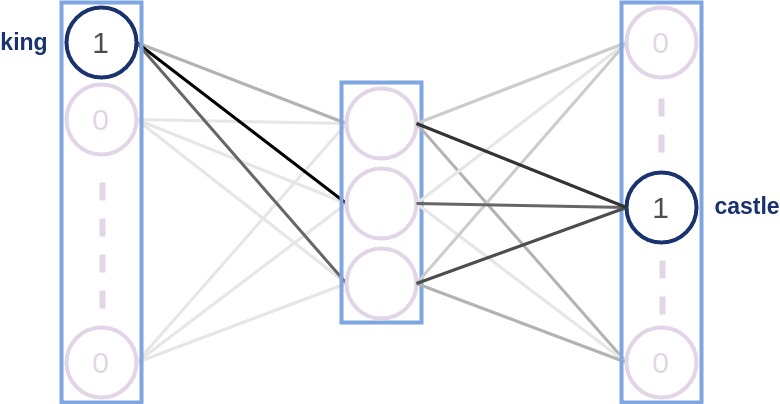

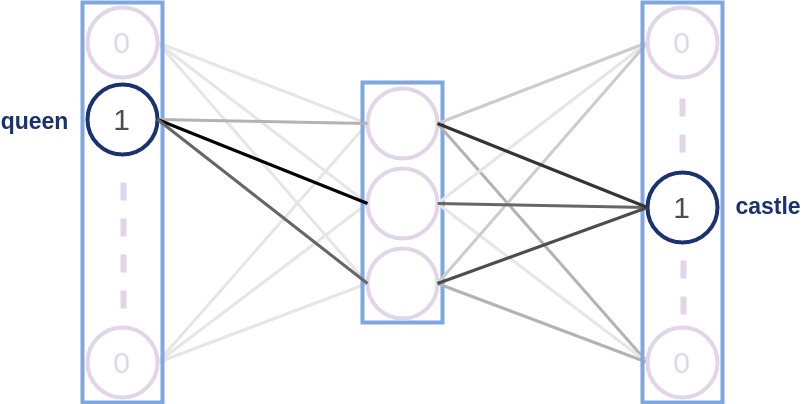

Note the different coloring of each line. The weights have been updated, and the strongest connections are now the links that map the word “king” to the word “castle”: the network has learned to connect these two words. Is this useful in and of itself, however? Not really. What makes the model useful is that the mapping between words similar to “king” and the same word “castle” ends up looking similar. Here’s what the learned mapping between “queen” and “castle” might look like:

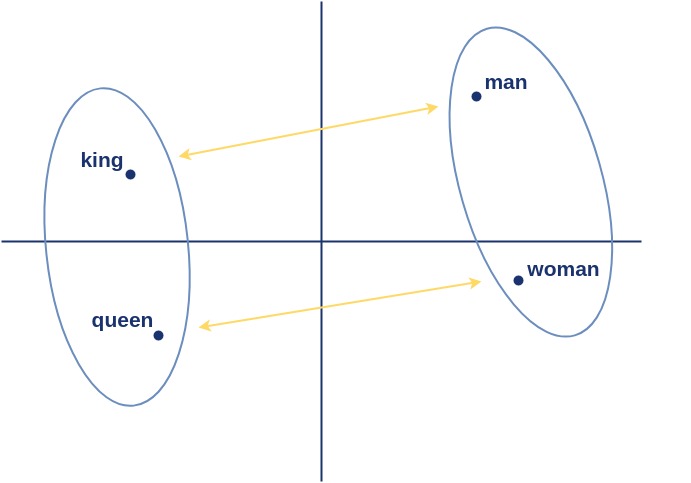
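The training loop can be sketched in a few lines of NumPy. This toy version rests on several assumptions: a full softmax over a tiny vocabulary (word2vec itself relies on tricks such as negative sampling to scale), plain stochastic gradient descent, and made-up training pairs in which “king” and “queen” share the same context words. The names `train_word_vectors` and `cosine`, and all hyperparameters, are hypothetical:

```python
import numpy as np


def train_word_vectors(pairs, dim=8, lr=0.1, epochs=300, seed=0):
    """Toy skip-gram: full softmax output layer, plain SGD, no negative sampling."""
    vocab = sorted({w for pair in pairs for w in pair})
    index = {w: i for i, w in enumerate(vocab)}
    rng = np.random.default_rng(seed)
    # Row i of w_in holds the weights leaving input word i: that word's vector.
    w_in = rng.normal(scale=0.1, size=(len(vocab), dim))
    w_out = rng.normal(scale=0.1, size=(dim, len(vocab)))
    for _ in range(epochs):
        for word, target in pairs:
            i, j = index[word], index[target]
            h = w_in[i].copy()               # one-hot input times w_in selects row i
            scores = h @ w_out
            probs = np.exp(scores - scores.max())
            probs /= probs.sum()             # softmax over the vocabulary
            grad = probs
            grad[j] -= 1.0                   # d(cross-entropy)/d(scores)
            w_in[i] -= lr * (w_out @ grad)   # update the input word's vector
            w_out -= lr * np.outer(h, grad)  # update the output-layer weights
    return {w: w_in[index[w]] for w in vocab}


def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


# Hypothetical pairs: "king" and "queen" share their context words.
pairs = [("king", "castle"), ("king", "crown"),
         ("queen", "castle"), ("queen", "crown"),
         ("apple", "tree"), ("apple", "fruit")]
vectors = train_word_vectors(pairs)
print(cosine(vectors["king"], vectors["queen"]))
print(cosine(vectors["king"], vectors["apple"]))
```

Because “king” and “queen” are trained to predict the same words, they receive nearly identical updates, so the first similarity should come out clearly higher than the second.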

In the end, we don’t really care how good the model is at predicting these word relationships. What we care about are the weight vectors it learns along the way, because they capture interesting relationships between words. Here’s what a 2D projection of the word vectors of “king”, “queen”, “man”, and “woman” looks like:

The model captures not only the intuitive idea that “king” and “queen” are similar in meaning, just as “man” and “woman” are, but also consistent relationships across these word clusters. It learns that, within the king-queen cluster, “king” plays the role that “man” plays in the man-woman cluster. This lets it answer questions like “a king is to a man as a queen is to a ???”, where the obvious answer is “woman”. Another relationship it learns is the one between capitals and their countries (e.g. Rome-Italy, Bucharest-Romania). Most importantly, it broadly captures the semantics of words.
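A common way to answer such an analogy with word vectors is vector arithmetic: compute vec(man) - vec(king) + vec(queen) and return the nearest remaining word by cosine similarity. The 3-D vectors below are invented for illustration (their axes loosely stand for royalty, maleness, and personhood); real embeddings have hundreds of dimensions and no such clean axes. The function names are hypothetical:

```python
import numpy as np


def most_similar(target, vectors, exclude=()):
    """Return the word whose vector has the highest cosine similarity to `target`."""
    best_word, best_score = None, -np.inf
    for word, vec in vectors.items():
        if word in exclude:
            continue
        score = vec @ target / (np.linalg.norm(vec) * np.linalg.norm(target))
        if score > best_score:
            best_word, best_score = word, score
    return best_word


def analogy(a, b, c, vectors):
    """Solve 'a is to b as c is to ?' by searching near b - a + c."""
    target = vectors[b] - vectors[a] + vectors[c]
    return most_similar(target, vectors, exclude={a, b, c})


# Hypothetical vectors; axes loosely read as (royalty, maleness, person).
vectors = {
    "king": np.array([1.0, 1.0, 1.0]),
    "queen": np.array([1.0, 0.0, 1.0]),
    "man": np.array([0.0, 1.0, 1.0]),
    "woman": np.array([0.0, 0.0, 1.0]),
    "apple": np.array([0.1, 0.0, -1.0]),
}
print(analogy("king", "man", "queen", vectors))  # woman
```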

Document clustering

We’ve computed our word vectors, and we now have a powerful tool for representing words. Resolving analogies and finding synonyms is impressive, but these are rarely the most pressing business problems. The real power of word vectors becomes apparent when they are applied to harder natural language processing tasks.

The challenging problem we will explore in the rest of this article is document classification. Imagine you have a large collection of documents and would like to automatically divide it into semantically meaningful categories. A modern method for doing just that is the word mover distance. Introduced in 2015, it uses word embeddings to measure how similar two documents are, and it was reported to achieve state-of-the-art results on several document classification benchmarks.

The word mover distance (WMD) is a variation of a transportation optimization problem called the earth mover distance. Imagine you have a number of dirt piles scattered around and a number of holes to fill. The earth mover distance is the minimum amount of effort required to fill all the holes with the dirt, where moving dirt costs the amount moved multiplied by the distance it travels. If you think of the words in one document as piles of earth and the words in another document as holes to fill, the WMD measures the least amount of effort required to “move” the words of one document onto those of the other.
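The earth mover distance can be written as a small linear program: choose how much dirt flows from each pile to each hole, require every pile to be emptied and every hole to be filled, and minimize the total of amount x distance. The sketch below solves it with SciPy’s `linprog` using the HiGHS solver; the function name and the two-pile example are hypothetical, and it assumes the total dirt equals the total hole capacity (as in the WMD setting, where both documents’ word weights sum to 1):

```python
import numpy as np
from scipy.optimize import linprog


def earth_movers_distance(supply, demand, cost):
    """Minimum total (amount moved x distance) needed to move `supply` into `demand`.

    supply: (n,) dirt in each pile; demand: (m,) capacity of each hole;
    cost: (n, m) distance from pile i to hole j. Assumes sum(supply) == sum(demand).
    """
    n, m = cost.shape
    # One variable per (pile, hole) pair, flattened row-major: flow[i * m + j].
    a_eq = np.vstack([
        np.kron(np.eye(n), np.ones(m)),  # everything leaving pile i equals supply[i]
        np.kron(np.ones(n), np.eye(m)),  # everything entering hole j equals demand[j]
    ])
    b_eq = np.concatenate([supply, demand])
    result = linprog(cost.ravel(), A_eq=a_eq, b_eq=b_eq,
                     bounds=(0, None), method="highs")
    if not result.success:
        raise ValueError(result.message)
    return result.fun


# Hypothetical example: two piles on the ground, two holes 3 units away.
piles = np.array([[0.0, 0.0], [4.0, 0.0]])
holes = np.array([[0.0, 3.0], [4.0, 3.0]])
cost = np.linalg.norm(piles[:, None, :] - holes[None, :, :], axis=-1)
dist = earth_movers_distance(np.array([1.0, 1.0]), np.array([1.0, 1.0]), cost)
print(dist)  # about 6.0: each pile goes straight into the hole above it
```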

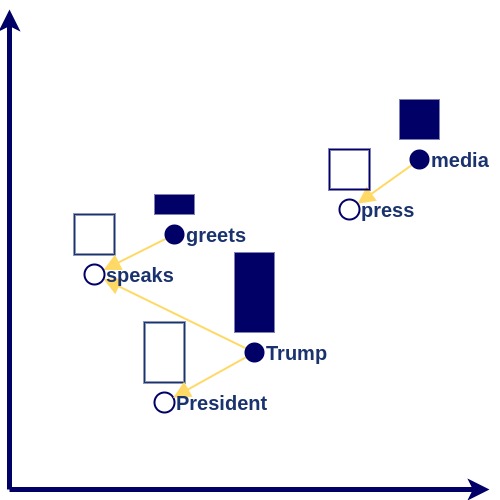

The size of each word’s metaphorical pile or hole is given by the classical bag-of-words representation we briefly covered at the beginning: words with higher normalized frequency counts carry more weight. The distance between two words is the Euclidean distance between their word vectors. Graphically, this can be displayed as:

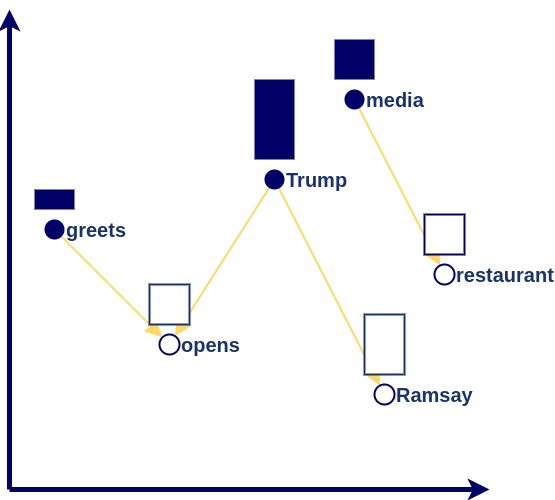

Note how the model discovers the semantic relationship between the two sentences, “The President speaks to press” and “Trump greets media”, despite there being no word overlap. A much different sentence, like “Ramsay opens a new restaurant”, is farther away in this space and thus not as similar:

The word mover distance produces strong results on a number of document benchmarks. Without any supervision, it turns raw text into pairwise similarities between documents, which a clustering algorithm can then use to split the collection automatically into semantically related categories.
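Putting the pieces together, here is a sketch of the word mover distance between short documents: normalized bag-of-words weights for each word, Euclidean distances between word vectors as the transport cost, and the same kind of linear program as the earth mover distance. The 2-D word vectors are invented so that the example sentences behave as described; tokens are assumed to be lowercased with stop words already removed, and the function names are hypothetical:

```python
from collections import Counter

import numpy as np
from scipy.optimize import linprog


def nbow(tokens):
    """Normalized bag-of-words: distinct words and each one's share of the tokens."""
    counts = Counter(tokens)
    words = sorted(counts)
    weights = np.array([counts[w] for w in words], dtype=float)
    return words, weights / weights.sum()


def word_movers_distance(doc1, doc2, vectors):
    """Cheapest way to 'move' the words of doc1 onto the words of doc2."""
    words1, d1 = nbow(doc1)
    words2, d2 = nbow(doc2)
    x1 = np.array([vectors[w] for w in words1])
    x2 = np.array([vectors[w] for w in words2])
    # Cost of moving one unit of word i to word j: Euclidean distance of their vectors.
    cost = np.linalg.norm(x1[:, None, :] - x2[None, :, :], axis=-1)
    n, m = cost.shape
    a_eq = np.vstack([np.kron(np.eye(n), np.ones(m)),   # word i sends out exactly d1[i]
                      np.kron(np.ones(n), np.eye(m))])  # word j receives exactly d2[j]
    result = linprog(cost.ravel(), A_eq=a_eq, b_eq=np.concatenate([d1, d2]),
                     bounds=(0, None), method="highs")
    return result.fun


# Hypothetical 2-D vectors chosen so that related words sit close together.
vectors = {
    "president": np.array([1.0, 0.0]), "trump": np.array([0.9, 0.1]),
    "speaks": np.array([0.0, 1.0]), "greets": np.array([0.1, 0.9]),
    "press": np.array([1.0, 1.0]), "media": np.array([0.9, 0.9]),
    "ramsay": np.array([-1.0, 0.0]), "opens": np.array([0.0, -1.0]),
    "new": np.array([0.0, -0.5]), "restaurant": np.array([-1.0, -1.0]),
}
doc1 = ["president", "speaks", "press"]
doc2 = ["trump", "greets", "media"]
doc3 = ["ramsay", "opens", "new", "restaurant"]
print(word_movers_distance(doc1, doc2, vectors))  # small: every word has a close match
print(word_movers_distance(doc1, doc3, vectors))  # much larger
```

An exact WMD needs a full transport solve for every pair of documents, which gets expensive for long documents; the original paper also describes cheaper lower bounds that can be used to prune candidates.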

If you have a large, unstructured collection of documents, you can use this model to produce semantic category filters automatically. You can also improve search across the collection by having the model return documents whose meaning is similar to that of the query, even when they share few or no words with it.

Supervised text vectors

Unsupervised methods can be spectacular in how much they achieve with very little human input, but there are still performance gains to be had when labels are available. Imagine you have three documents that cover the same subject. An unsupervised method will naturally consider all three to be quite similar to one another. But what if two of them were written by one author and the third by another? To account for this kind of labeling, the supervised word mover distance takes in the extra information and learns a transformation of the word vectors, along with the importance of each word, so that distances reflect the semantics associated with each label.

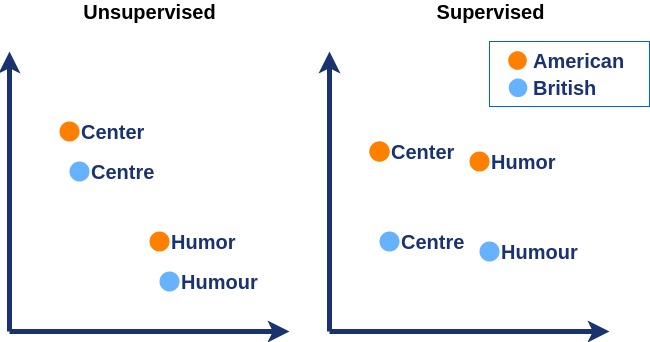

Take, for example, the words “humour” and “humor”, the British and American spellings of the same word. Word vectors learned without supervision are likely to sit close to one another, because the two words are identical in meaning and any difference between British and American style is very hard to capture without supervision. However, if documents using each spelling carried different labels, the supervised model would learn to accentuate the difference between them. Here’s what that would look like:

When training labels are available, the supervised word mover distance improves further on the unsupervised version across a number of datasets. It is a powerful method for classifying text and is particularly well suited to problems where word overlap is limited (e.g. tweets, conversations).
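At the heart of the supervised variant is a linear map applied to the word vectors before distances are measured. In the supervised word mover distance this map is learned from the labels, together with per-word importance weights; in the sketch below it is simply hand-picked to show its effect on “humour” and “humor”. The vectors, the stretch factor of 20, and the function name are hypothetical:

```python
import numpy as np


def transformed_distance(u, v, transform):
    """Euclidean distance between u and v after applying the linear map `transform`."""
    return float(np.linalg.norm(transform @ (u - v)))


# Hypothetical embeddings: the third axis faintly encodes the spelling convention.
humour = np.array([0.80, 0.60, 0.05])
humor = np.array([0.80, 0.60, -0.05])

unsupervised = np.eye(3)                 # no transformation: plain Euclidean distance
supervised = np.diag([1.0, 1.0, 20.0])   # hand-picked stand-in for a learned map
print(transformed_distance(humour, humor, unsupervised))  # about 0.1
print(transformed_distance(humour, humor, supervised))    # about 2.0
```

Plugging such a transformed distance in as the word-to-word cost is what lets label information reshape the document distances.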